Another reason why I eschew viewing college sport. If this be (fair & balanced) heresy, so be it.

Friday, October 31, 2003

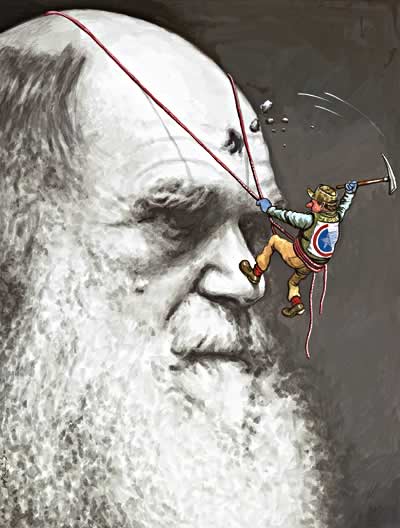

Ben Sargent on Big-Time College Football

Quo Vadis, Clio?

History never ends. I have believed that all of my adult life. Most of my students believe that history never begins or history is one damned thing after another. Ah, what a life! Surrounded by the unwilling, the contemptuous, the unthinking. If this be (fair & balanced) pessimism, so be it.

[x City Journal]

Why History Has No End

by Victor Davis Hanson

Writing as the Berlin Wall fell in 1989, Francis Fukuyama famously announced the “End of History.” The world, he argued, was fast approaching the final stage of its political evolution. Western democratic capitalism had proved itself superior to all its historical rivals and now would find acceptance across the globe. Here were the communist regimes dropping into the dustbin of history, Fukuyama noted, while dictatorships and statist economies in Asia and South America were toppling too. A new world consumer class was evolving, leaving behind such retrograde notions as nationhood and national honor. As a result, war would grow rare or even vanish: what was there left to fight about? Gone, or going fast, was the old stuff of history—the mercurial, often larger-than-life men who sorted out on the battlefield the conflicts of traditions and values that once divided nations. Fukuyama acknowledged that the End of History would have a downside. Ennui would set in, as we sophisticated consumers became modern-day lotus-eaters, hooked on channel surfing and material comforts. But after the wars of the twentieth century, the prospect of peaceful, humdrum boredom seemed a pretty good deal.

How naive all this sounds today. Islamist hijackers crashing planes into the World Trade Center and the Pentagon, and the looming threat of worse terror outrages, have shown that a global embrace of the values of modern democracy is a distant hope, and anything but predetermined. Equally striking, it’s not just the West and the non-democratic world that are not converging; the West itself is pulling apart. Real differences between America and Europe about what kind of lives citizens can and should live not only persist but are growing wider.

A Fukuyaman might counter that September 11 was only a bump on the road to universal democracy, prosperity, and peace. Whether the Middle East’s mullahs and fascists know it or not, this argument would run, the budding spiritual and material desires of their masses for all things Western eventually will make them more like us—though how long this will take is unknown. It’s impossible ultimately to disprove such a long-range contention, of course. But look around. Fukuyama’s global village has seen a lot of old-fashioned ethnic, religious, and political violence since history’s purported end in 1989: Afghanistan, Algeria, Colombia, Iraq, Russia, Rwanda, Sudan, the former Yugoslavia, to name just a handful of flash points. Plato may have been right when he remarked in his Laws that peace, not war, is the exception in human affairs.

In fact, rather than bringing us all together, as Fukuyama predicted, the spread of English as the global lingua franca, of accessible, inexpensive high technology, and of universal fashion and communication has led to chaos as often as calm. These developments have incited envy, resentment, and anger among traditional societies. The men and women of these societies sense—as how could they not when encountering the image of Britney Spears gyrating or the subversive idea of free speech?—an affront to the power of the patriarch, mullah, or other hierarchical figure who demands respect based solely on religious dogma, gender, or class.

The new technologies, despite what Fukuyama would say, do not make modern liberal democrats out of our enemies but simply allow them to do their destructive work more effectively. Even though Islamists and other of globalization’s malcontents profess hatred for capitalist democracy, they don’t hesitate to use some of the West’s technological marvels against the West itself. How much easier it has become to plot and shoot and bomb and disrupt and incite with all those fancy gadgets! Flight simulators made it simpler for medieval-minded men, decked out in Nikes and fanny packs, to ram a kiloton or two of explosive power into the New York skyline. Both Usama and Saddam have employed modern mass media to cheer and spur the killing of infidels.

Leaving aside the mullahs and Arab despots, where is there much proof that freedom must follow in the train of affluence, as Fukuyama holds? Left-wing capitalists in China, their right-wing counterparts in Singapore, or their ex-KGB counterparts in Russia certainly do not assume that their throngs of consumers are natural democrats, and so far there’s little to say that they’re wrong. And where is the evidence that these consumers will inevitably become comfort-loving pacifists? Democracy and market reforms seem only to have emboldened India to confront Pakistan’s terrorist-spawning madrassa culture. Rich and free Japan is considering rearming rather than writing more checks to stop North Korean missiles from zooming through its airspace.

America, too, seems as subject to history as ever. Abandoning the belief that it’s always possible to keep thuggish regimes in check with words and bribes, it is returning to military activism, seeking to impose democracy—or at least some kind of decent government—on former terrorist-sponsoring nations, instead of waiting for the end of history somehow to make it spring up. Sure, postmodern, peroxide-topped Jasons and tongue-pierced Nicoles sulk at malls from coast to coast—bored, materialistic Fukuyamans all. But by contrast there are those American teens of the Third Mechanized Division, wearing their Ray-Bans and blaring rock, who rolled through Iraq like Patton’s Third Army reborn, pursuing George W. Bush’s vision of old-fashioned military victory, liberation, and nation building.

What about Europe? Surely there we can see Fukuyama’s post-historical future shaping up, in an increasingly hedonistic life-style that puts individual pleasure ahead of national pride or strong convictions, in a general embrace of pacifism, and in support for such multinational institutions as the United Nations, the International Criminal Court, and the European Union, which promise affluence and peace based on negotiation and consensus. Europeans say that sober reflection on their own checkered past has taught them to reject wars of the nation-state, to mediate, not deter, and to trust in Enlightenment rationality instead of primitive emotions surrounding God and country.

Look closer, though, and you’ll discover the pulse of history still beating beneath Europe’s postmodern surface—and beating stronger daily. During the cold war, it is important to remember, the looming threat of the Soviet Union kept in check the political ambitions and rivalries of Europe’s old nations. The half-century of peace from 1945 to 1989 was not so much a dividend of new attitudes as the result of the presence of a quarter-million American troops, who really did keep the Russians out and Germany’s military down. Facing a mutual foe armed with the most advanced weaponry, fielding an enormous army, embodying a proselytizing revolutionary ideology, and willing to shed the blood of 30 million of its own citizens did wonders to paper over less pressing differences. If France and Germany don’t stand as squarely with us in the War on Terror as they did in the cold war, it’s in part because al-Qaida and its rogue-nation supporters, menacing as they are, don’t threaten Europe’s capital cities with thousands of nuclear missiles, as did the Soviets.

With the evil empire’s collapse and America’s gradual withdrawal (the U.S. has closed 32 bases in Europe and reduced its troop presence there by 65 percent since a cold-war high), the European nations’ age-old drive for status, influence, and power has slowly started to reassert itself, increasing tensions on the Continent. German chancellor Gerhard Schröder proclaims “decisions will be made in Berlin,” French president Jacques Chirac shakes a finger at Poles and Rumanians for not following France’s leadership, and Italian and German politicians hurl schoolyard insults at one another. The Eastern Europeans, bordered by a reunified Germany and a nationalist Russia, wonder whether American guns might not provide better insurance than the European Union should the aggressive urges of historical enemies prove merely dormant and not extinct. It will be interesting to see whether all of Europe or just some of its historically more bellicose states will boost defense expenditures above the parsimonious European average of 0.5 percent of GNP.

Europe’s resurgent political ambitions and passions are even more apparent in the Continent’s relations with the U.S.—relations that, in the controversy over military action in Iraq, worsened to the point where France and Germany openly opposed and undermined their ally of a half-century.

Such behavior hardly squares with the End-of-History model, even though the Europeans were making post-historical complaints that the United States was acting like the Lone Ranger in threatening to go to war in Iraq without the approval of the “world community,” and even though the European position was that the diplomacy of the international community, working through the United Nations, could eventually have handled the Iraq problem without the terrible cost of war. But in truth, the European opposition to the U.S. over Iraq and the fuss the European nations made about international organizations and diplomacy had more to do with realpolitik—the desire of those nations to answer American influence and champion their own power—than they did with any belief in the obsolescence of national identity or military force. How else could the once-great nations of Europe counter American influence, given the present comparative weakness of their arms and the rigidities of their economies, than by shackling the “hyper-power” with the mandates of the “world community”?

Indeed, for all the self-righteously idealistic rhetoric that French foreign minister Dominique de Villepin spouted at the U.N. last spring, the real reason that he strong-armed the Third World nations to vote against the American push for military action in Iraq was to recapture a little French puissance on the cheap. (Recall that he penned a hagiography of that ultimate French nationalist, Napoleon.) With just one wee aircraft carrier, the Charles de Gaulle, in its national arsenal, France finds it wiser and more financially feasible—for now—to exert its clout through the U.N. than to build lots of new carriers to try to match the U.S. in military might.

The dustup between the old Europeans and the U.S. over Iraq, in which Europe’s historical national ambitions played an indisputable role, only widened an already existing transatlantic rift, the product of substantial historical, cultural, and political differences between Europe’s democracies and our own. Resentment of the U.S. runs deep on the Continent. In part, this is a psychological residue of World War II. With nations, as with people, no good deed goes unpunished. France would not exist today without Normandy Beach—a permanent blow to its self-esteem. American arms both destroyed Germany and helped make it the flourishing nation it is today. Should Germans hate us or thank us for saving them from themselves? Both France and Germany would have become a playground for Russian soldiers and tanks if not for the U.S.’s military presence in NATO, and for proud European nations to become so dependent must surely rankle. Since the end of the cold war, it has become harder for European nations to keep this wounded pride in check—though of course the resentments have been intertwined with genuine gratitude and friendship toward America.

European animosity toward the U.S. also has a snobbish component—an anti-bourgeois disdain that is the dual legacy of Europe’s socialist Left and ancien régime Right. Notice how the latest “nuanced” European criticisms of America often start out on the Left—we’re too hegemonic and don’t care about the aspirations of poor countries—and then, in a blink of an eye, they veer to the aristocratic Right: we’re a motley sort, promoting vulgar food and mass entertainment to corrupt the tastes of nations that have a much more refined tradition. That Europeans now eat at McDonald’s and love Hollywood trash—that’s simply the result of American corporate brainwashing.

Our old-fashioned belief in right and wrong along with our willingness to act on that belief also infuriates the Europeans. Americans have an ingrained distrust of moral laxity masquerading as “sophistication,” and our dissident religious heritage has made us comfortable with making clear-cut moral choices in politics—“simplistic” choices, Euros would say. It is precisely because we recognize the existence of evil, pure and simple, that we feel justified in using force to strip power from ogres like Mullah Omar and Saddam Hussein—or kill them, like Uday and Qusay Hussein. Europeans, cynical in politics and morals, think that this attitude makes us loose cannons.

But paradoxically, the most consequential reason Continental Europe and America are pulling apart is the European Union itself. European visionaries have had a long history of dreaming up and seeking to implement nationalist or socialist utopias—schemes, doomed to fail, that have trampled individuals under the heavy boot of the state as the price of creating a “new man” and a perfect world, bringing history to fulfillment. The murderous fraternity of the French Revolution, nineteenth-century Bonapartism, Marxism and modern communism, Francoism, Italian fascism, Nazism—all these coercive programs for remaking the world sprang from what seems an ineradicable Continental impulse.

The European Union, benign as it currently seems, is the latest manifestation of this utopian spirit. The E.U.’s greatest hubris is to imagine that it can completely overcome the historical allegiances and political cultures of Europe’s many nations by creating a “European” man, freed entirely from local attachments and resentments, conflicting interests, ethnicity, and differing visions of the good life, and wedded instead to rationality, egalitarianism, secularism, and the enlightened rule of wise bureaucrats. No less utopian is the E.U.’s assumption, contrary to all economic reason, that a 35-hour workweek, retirement at 55, ever-longer vacations, extensive welfare benefits, and massive economic regulation can go together with swelling prosperity. All that makes this squaring of the circle plausible even in the short term is Europe’s choice to spend little on defense, which allows more money to go to welfare programs—a choice itself resting on another utopian assumption: that the world has entered a new era in which disagreements between nations can be resolved peacefully, through the guidance and pressure of international organizations—above all, the United Nations. In reality, of course, Europe relies on the United States to take care of many of its defense needs.

Like Europe’s brave new worlds of the past, the E.U. is in fact a deeply anti-democratic mechanism that elites can use to grab power while mouthing platitudes about “brotherhood,” designed to appeal to the citizen’s desire to participate in some kind of higher vision. The E.U.’s transnational government has nothing in place to ensure an institutional opposition—no bicameral legislature, no independent review by high courts, no veto power for individual member states. This authoritarian arrangement allows the E.U. to rule by diktat rather than by consensus and review. Rural Montanans can complain to their congressmen that Washington is out of touch; to whom will Estonians complain that Brussels has no right to decide what goes on their restaurant menus?

The E.U.’s disdain for democracy on the Continent carries over to its relations with the rest of the world. Even as Jews duck Muslim mobs in French cities, E.U. apparatchiks can slur democratic Israel as fascist, and Berlin and Paris can triangulate with Iran’s mullocracy. Only contempt for the messy give-and-take of democracy, plus a nasty dose of anti-Semitism, can explain why the despotic Arafat is more popular than the democratic Sharon among E.U. elites.

As for the U.S.—the democratic nation par excellence (so to speak)—the E.U. is contemptuous, so much so that anti-Americanism often seems to be the union’s founding principle. The E.U. channels all the wounded pride, resentment, and snobbish scorn that Europeans feel toward Americans into its grand ambition to stand up to the U.S. on the world stage. Regardless of whether Bush or Clinton is in charge, we hear boos from Athens to Berlin. Was it Durban or Kyoto that angered Europeans? Or maybe it was the execution of Timothy McVeigh, John Ashcroft’s hunt for terrorists, the lack of good cuisine at Guantanamo, or the failure to find WMDs?

It’s understandable, really, that the E.U. has set itself against America. Nothing is more foreign to European statist utopianism than the American emphasis on individual liberty, local self-government, equality under the law, and slow, imperfect reform. America has always been immune to utopian fantasies—indeed, it has always opposed them. The skeptical Founding Fathers, influenced by the prudence and love of liberty of the British Enlightenment, built the American republic based on the anti-utopian belief that men are fallible and self-interested, love their property, and can best manage their affairs locally. The Founders saw the café theorizing of Continental elites and French philosophers as a danger to good government, which requires not some grand, all-encompassing blueprint but rather institutional checks and balances and a citizenry of perennially vigilant individual citizens.

From America’s very beginnings in the wilderness of the New World, that spirit of rugged individualism and self-reliance has found a home here, and it stands diametrically opposed to European collectivism in all its forms—from the organic, hierarchical community extolled by the old European Right to the socialist commune on the Left to the E.U.’s rationalist super-state. Of course, our self-reliant ethos sometimes can seem less than fraternal, as I can attest from personal experience. I farm, among other things, raisins. Recently, the price of raisins crashed below its level of some 40 years ago. My friends casually suggested that I pack it in, uproot an ancestral vineyard, move on to something else—not, as in Europe, circle the tractor around the capitol, block traffic, or seek government protection and subsidies as a representative of a hallowed way of life under threat from globalization. But who can plausibly deny that America’s astounding dynamism and productivity result from this deep-seated belief that individual men and women are responsible for their own destinies and have no birthright from the state to be affluent?

The growing split between the U.S. and Europe that has resulted from these trends is of seismic importance. Though the effort to create a “European Union” may offer superficial relief when one considers Europe’s bloody history, it in fact constitutes a potential long-term threat to the U.S. and to the world. To the extent that this project succeeds in forging a common European identity, anti-Americanism will likely be its lodestar. But of course, it ultimately will fail, because for most people being a European could never be as meaningful, have such rich cultural and historical resonance, as being a Frenchman or a German. And even in failure, the project could be catastrophic: by denigrating a healthy and natural sense of nationhood, the E.U. risks unleashing a militaristic chauvinism in some of its member states—threatening not only the U.S. but Europe as well. When a German chancellor wins reelection campaigning as an anti-American and trumpeting a new “German way,” it’s not hard to see behind his success an embittered populace, motivated by the belief that German cultural energy and economic prosperity (increasingly encumbered by the corporate welfare statism that is the real “German way”) don’t win sufficient status on the world stage, where military might counts the most.

Behind the pretense that a dash of multinationalism and pacifist platitudes have suddenly transformed Europe into some new Fukuyama-type End-of-History society, it is still mostly the continent of old, torn by envy and pride, conjuring up utopian fantasies of pan-European rule at the same time as nationalist resentments fester. That’s what makes the question of European rearmament so crucial. Should Europe rearm—and I think it will, either collectively or nation by nation, as America reduces its military presence—it has the population, economic power, and (most important) the know-how to field forces as good as our own. If Germany invested 4 to 5 percent of its GNP in defense, its new Luftwaffe would not resemble Syria’s air force. Two or three French aircraft carriers—snickers about the petite Charles de Gaulle aside—could destroy the combined navies of the Middle East. We may laugh today at the unionized Belgian military of potbellied cooks and barbers, or scoff at German pacifism, but this is still Europe, which gave birth to the Western military tradition—the most lethal the world has ever known.

How a re-militarized Europe views the United States is therefore important. A powerful, well-adjusted Europe, made up of nations that would curb arms sales to rogue regimes, fight shoulder to shoulder with us against Islamic terror, warn North Korea, and stop funneling money to Arafat’s Palestinian Authority would make the world a better place. An armed Europe of renascent nationalisms, or one pursuing the creation of a transnational continental super-state, could prove our greatest bane since 1941. That Europe is now militarily weak and hostile does not mean that it will not soon be either powerful and friendly—or powerful and hostile.

These emerging trends require the United States to rethink its relations with Europe. NATO’s American architects rightly believed that the organization they created not only would protect Europeans from Russians and Europeans from one another, but shield us from them as well. We need comparable hardheadedness in thinking of what steps we should take to improve relations with Europe—and protect ourselves at the same time.

Completing the removal of most of our troops from Western Europe is a good first move. It would lessen the Europeans’ sense of impotence and thus diminish envy and encourage maturity. (Leaving a few bases in Britain, Italy, and Spain will allow us to retain some vital transportation and communication facilities—and have monitoring stations in place to gauge the tempo of the inevitable rearming.) We can redeploy the freed-up troops in strategically vital areas of the Middle East.

Second, there’s nothing wrong with bilateralism, so we should treat the E.U. the way we treat Yasser Arafat: smile, and deal with someone else. We should seek good relations and coalitions with willing European states, presuming that we can be friendly with many, even though we are not friendly with all. By offering an alternative to European nations that might worry about the E.U.’s anti-democratic tendencies, we can perhaps limit the union’s worst behavior.

Finally, if the Europeans insist on empowering unaccountable international organizations like the U.N., we should at least try to make them more accountable and reflective of political realities, making it harder for the Europeans to use the mask of internationalism to pursue their own power-political ends. The permanent members of the U.N. Security Council, for example, should include India and Japan, countries that by all measures of population, economic power, and military potential warrant parity with current permanent member France. If the General Assembly is always going on about democracy and human rights, moreover, why not put constant pressure on some of its more benighted members to extend human and democratic rights to their own peoples?

A tough-minded stance toward Europe will accept that a Germany or a France will not always be our ally; given our respective histories and differing views of the state, we should not be surprised to meet with hostility—and when it comes, we should recognize that it may have more to do with what we represent as a nation than any particular policies that we have pursued. The West has experienced similar intramural conflict throughout its history: between Catholic, Orthodox, and Protestant Christians; between Atlantic-port New Worlders and Mediterranean galley states; between the French and the British as they triangulated with the Ottomans—to say nothing of the mad continental utopians, warring on the free societies of Europe and the world.

Ultimately, America seeks neither a hostile nor a subservient Europe, but one of confident democratic allies like the U.K.: allies that provide us not only with military partnership but trustworthy guidance too. The U.N. has never really either prevented or ended a war; our democratic friends in World War I and II, along with NATO, sometimes have. We stand a better chance of bringing about such a future if we remember from history that man’s nature, for all the centuries’ talk about human perfectibility, is unchanging—and that therefore history never ends.

Copyright © 2003 The Manhattan Institute

Thursday, October 30, 2003

Iraqification?

Mission Accomplished. Baghdad Bob. Blame the ingenious advance men. (Sneer that none of the advance men can be called ingenious; this, from the personification of verbal ineptitude.) Can anyone spell D-I-S-I-N-G-E-N-U-O-U-S? Can anyone spell D-I-S-A-S-T-R-O-U-S? Up is down. In is out. Black is white. George Orwell was right about language and the abuse of power. Soon, the NASCAR Dads won't know the difference between left and right. I think, in the NASCAR world, everything goes left (except at the ballot box). If this be (fair & balanced) fear & loathing, so be it.

[x NYTimes]

Eyes Wide Shut

By MAUREEN DOWD

WASHINGTON — In the thick of the war with Iraq, President Bush used to pop out of meetings to catch the Iraqi information minister slipcovering grim reality with willful, idiotic optimism.

"He's my man," Mr. Bush laughingly told Tom Brokaw about the entertaining contortions of Muhammad Said al-Sahhaf, a k a "Comical Ali" and "Baghdad Bob," who assured reporters, even as American tanks rumbled in, "There are no American infidels in Baghdad. Never!" and, "We are winning this war, and we will win the war. . . . This is for sure."

Now Crawford George has morphed into Baghdad Bob.

Speaking to reporters this week, Mr. Bush made the bizarre argument that the worse things get in Iraq, the better news it is. "The more successful we are on the ground, the more these killers will react," he said.

In the Panglossian Potomac, calamities happen for the best. One could almost hear the doubletalk echo of that American officer in Vietnam who said: "It was necessary to destroy the village in order to save it."

The war began with Bush illogic: false intelligence (from Niger to nuclear) used to bolster a false casus belli (imminent threat to our security) based on a quartet of false premises (that we could easily finish off Saddam and the Baathists, scare the terrorists and democratize Iraq without leeching our economy).

Now Bush illogic continues: The more Americans, Iraqis and aid workers who get killed and wounded, the more it is a sign of American progress. The more dangerous Iraq is, the safer the world is. The more troops we seem to need in Iraq, the less we need to send more troops.

The harder it is to find Saddam, Osama and W.M.D., the less they mattered anyhow. The more coordinated, intense and sophisticated the attacks on our soldiers grow, the more "desperate" the enemy is.

In a briefing piped into the Pentagon on Monday from Tikrit, Maj. Gen. Raymond Odierno called the insurgents "desperate" eight times. But it is Bush officials who seem desperate when they curtain off reality. They don't even understand the political utility of truth.

After admitting recently that Saddam had no connection to 9/11, the president pounded his finger on his lectern on Tuesday, while vowing to stay in Iraq, and said, "We must never forget the lessons of Sept. 11."

Mr. Bush looked buck-passy when he denied that the White House, which throws up PowerPoint slogans behind his head on TV, was behind the "Mission Accomplished" banner. And Donald Rumsfeld looked duplicitous when he acknowledged in a private memo, after brusquely upbeat public briefings, that America was in for a "long, hard slog" in Iraq and Afghanistan.

No juxtaposition is too absurd to stop Bush officials from insisting nothing is wrong. Car bombs and a blitz of air-to-ground missiles turned Iraq into a hideous tangle of ambulances, stretchers and dead bodies, just after Paul Wolfowitz arrived there to showcase successes.

But the fear of young American soldiers who don't speak the language or understand the culture, who don't know who's going to shoot at them, was captured in a front-page picture in yesterday's Times: two soldiers leaning down to search the pockets of one small Iraqi boy.

Mr. Bush, staring at the campaign hourglass, has ordered that the "Iraqification" of security be speeded up, so Iraqi cannon fodder can replace American sitting ducks. But Iraqification won't work any better than Vietnamization unless the Bush crowd stops spinning.

Neil Sheehan, the Pulitzer Prize-winning author of "A Bright Shining Lie," recalls Robert McNamara making Wolfowitz-like trips to Vietnam, spotlighting good news, yearning to pretend insecure areas were secure.

"McNamara was in a jeep in the Mekong Delta with an old Army colonel from Texas named Dan Porter," Mr. Sheehan told me. "Porter told him, 'Mr. Secretary, we've got serious problems here that you're not getting. You ought to know what they are.' And McNamara replied: 'I don't want to hear about your problems. I want to hear about your progress.' "

"If you want to be hoodwinked," Mr. Sheehan concludes, "it's easy."

Copyright © 2003 The New York Times Company

Wednesday, October 29, 2003

Iraq Is Vietnam On Crack Cocaine

I heard two experts on military strategy tonight on NPR's All Things Considered. Robert Siegel interviewed a retired general (former commandant of the Army War College) and a retired Special Forces captain/retired CIA operative about the current mess in Iraq. Both denied that the U.S. is in a quagmire (à la Vietnam). Both denied that the current wave of violence is the Iraqi equivalent of Tet. Finally, both upheld the creation of a new Iraqi army as the solution to the Iraqi problem. Can anyone spell V-I-E-T-N-A-M-I-Z-A-T-I-O-N? If this be (fair & balanced) skepticism, so be it!

[x HNN]

When Soldiers Refuse to Soldier On

by Ruth Rosen

PRESIDENT BUSH is busily trying to convince Americans that the war in Iraq is a phenomenal success. Meanwhile, a recent survey conducted by the military newspaper Stars and Stripes found that half of the troops described their unit's morale as low and a third complained that their mission had little or no value. Many viewed themselves as sitting ducks, rather than soldiers engaged in war.

Last year, I heard a historian describe the Iraq war as Vietnam on crack cocaine. It was an apt comment. It took years, not months, before large numbers of civilians and soldiers questioned the sanity and cost of that war.

This time, the anti-war movement started before the invasion of Iraq. It may not be long before GIs refuse to follow orders or ask for discharges based on their conscientious objection to the occupation.

Many groups are supporting such dissatisfaction among the troops, including Veterans for Peace and Veterans for Common Sense. Some of the most anguished opposition appears on Web sites created by "Military Families Speak Out" and "Bring Them Home Now."

Also drawing attention is an "Open Letter to Soldiers Who are Involved in the Occupation of Iraq" posted Sept. 19 on the Internet by two men who know about refusing military orders.

James Skelly, now a senior fellow at the Baker Institute for Peace and Conflict Studies at Juniata College in Pennsylvania, was a lieutenant in the Navy during the '60s. Rather than serve in Vietnam, he applied for a discharge based on his conscientious objection to the war. When the Pentagon refused his application, he sued Defense Secretary Melvin Laird, charging that the military was holding him illegally, and became a West Coast founder of the Concerned Officers Movement.

Guy Grossman is a graduate student in philosophy at Tel Aviv University who serves as a second lieutenant in the Israeli reserve forces. He is one of the founders of "Courage to Refuse," a group of more than 500 soldiers who have refused to serve in the Occupied Territories for conscientious reasons.

"We write this letter," the two begin, "because we have both been military officers during conflicts that descended into a moral abyss and from which we struggled to emerge with our humanity intact." Addressing the terror and moral anguish faced by soldiers who cannot distinguish friend from foe, they write, "From time to time . . . some of you may want to take revenge for the deaths of your fellow soldiers." But they urge soldiers "to step back from such sentiments because the lives of innocent people will be placed at further risk, and your very humanity itself will be threatened."

They explain how soldiers can legally express their moral objections, but also warn of the physical dangers and social consequences that can result from open opposition to the occupation.

Knowing the cost of war to the human heart, they end with these words: "Regardless of what you decide, it is our fervent desire that . . . you ultimately return to your homes with your humanity enriched, rather than diminished."

Reached in Denmark, Skelly told me that although soldiers have not yet responded to the letter, some family members have replied quite favorably. One mother thanked him and wrote, "We are passing it around and surely many copies will find their way into Iraq." The sister of a Navy weapons officer explained why, after nine years in the service, her brother resigned his commission after his application for conscientious objection was denied. "Because of all the reasons you describe; I hope some soldiers will hear you and Guy Grossman -- and their own consciences."

"These families know their loved ones are fodder," said Skelly. The question is: What will happen when soldiers say the same thing?

Ruth Rosen is a columnist for the San Francisco Chronicle and former Professor of History at the University of California, Davis.

Copyright © 2003 History News Network

See, I Can Post A Rant Without Bashing W!

Wisconsin's Tom Terrific sent along this nice piece of historiana. The mantra of all historians is a concern with change over time. Consider what has happened since 1903. See, I can post to the blog without bashing W or ranting about the war in Iraq. I am not a simple person. If this is (fair & balanced) self-delusion, so be it!

Bob Hope just died at the age of 100. It's time to revisit where we were a century ago. This ought to boggle your mind.

YEAR OF 1903

The year is 1903, one hundred years ago... what a difference a century makes. Here are the U. S. statistics for 1903....

The average life expectancy in the US was 47.

Only 14% of the homes in the US had a BATHTUB.

Only 8% of the homes had a TELEPHONE.

A three-minute call from Denver to New York City cost $11.

There were only 8,000 CARS in the US and only 144 miles of paved ROADS.

The maximum speed limit in most cities was 10 mph.

Alabama, Mississippi, Iowa, and Tennessee were each more heavily populated than California. With a mere 1.4 million residents, California was only the 21st most populous state in the Union.

The tallest structure in the world was the Eiffel Tower.

The average wage in the US was $0.22/hour.

The average US worker made between $200 and $400 a year.

A competent accountant could expect to earn $2,000/year, a dentist $2,500/year, a veterinarian between $1,500 and $4,000/year, and a mechanical engineer about $5,000/year.

More than 95% of all BIRTHS in the US took place at HOME.

90% of all US physicians had NO COLLEGE education. Instead, they attended medical schools, many of which were condemned in the press and by the government as "substandard."

Sugar cost $0.04/pound. Eggs were $0.14/dozen. Coffee cost $0.15/pound.

Most women only washed their HAIR once a month and used BORAX or EGG YOLKS for shampoo.

Canada passed a law prohibiting POOR people from entering the country for any reason.

The five leading causes of death in the US were:

The American flag had 45 stars. Arizona, Oklahoma, New Mexico, Hawaii & Alaska hadn't been admitted to the Union yet.

The population of Las Vegas, Nevada was 30.

Crossword puzzles, canned beer, and iced tea hadn't been invented.

There was no Mother's Day or Father's Day.

One in ten US adults couldn't read or write. Only 6% of all Americans had graduated from HIGH SCHOOL.

Coca Cola contained cocaine. Marijuana, heroin & morphine were all available over the counter at corner drugstores. According to one pharmacist, "Heroin clears the complexion, gives buoyancy to the mind, regulates the stomach and the bowels, and is, in fact, a perfect guardian of health."

18% of households in the US had at least one full-time SERVANT or domestic.

There were only about 230 reported MURDERS in the entire US.

Just think what it will be like in another 100 years. It boggles the mind...

At Last, An Intelligent Discussion of Darwinian Theory

At Amarillo College (evidently), we don't discuss evolutionary theory in the biological sciences. According to the chief academic officer, there is more important material to discuss in the College's biology courses. This was echoed by the closet Creationist chair of the department. Five (5) junior faculty in the Department of Biological Sciences refused to comment to the campus newspaper last spring on this issue of evolutionary theory in Amarillo College biology classrooms. Were they struck dumb? Or, were they fearful of repercussions for stating their own views; the matter of tenure uppermost in their minds? If this be (fair & balanced) heresy, make the most of it.

[x The Spectator]

The mystery of the missing links

It is becoming fashionable to question Darwinism, but few people understand either the arguments for evolution or the arguments against it. Mary Wakefield explains the thinking on both sides

by Mary Wakefield

A few weeks ago I was talking to a friend, a man who has more postgraduate degrees than I have GCSEs. The subject of Darwinism came up. ‘Actually,’ he said, raising his eyebrows, ‘I don’t believe in evolution.’

I reacted with incredulity: ‘Don’t be so bloody daft.’

‘I’m not,’ he said. ‘Many scientists admit that the theory of evolution is in trouble these days. There are too many things it can’t explain.’

‘Like what?’

‘The gap in the fossil record.’

‘Oh, that old chestnut!’ My desire to scorn was impeded only by a gap in my knowledge more glaring than that in the fossil record itself.

Last Saturday at breakfast with my flatmates, there was a pause in conversation. ‘Hands up anyone who has doubts about Darwinism,’ I said. To my surprise all three — a teacher, a music agent and a playwright — slowly raised their arms. One had read a book about the inadequacies of Darwin — Michael Denton’s Evolution: A Theory in Crisis; another, a Christian, thought that Genesis was still the best explanation for the universe. The playwright blamed the doctrine of survival of the fittest for ‘capitalist misery and the oppression of the people’. Nearly 150 years after the publication of Charles Darwin’s Origin of Species, a taboo seems to be lifting.

Until recently, to question Darwinism was to admit to being either a religious nut or just plain thick. ‘Darwin’s theory is no longer a theory but a fact,’ said Julian Huxley in 1959. For most of the late 20th century Darwinism has seemed indubitable, even to those who have as little real understanding of the theory as they do of setting the video-timer. I remember a recent conversation with my mother: ‘Do you believe in evolution, Mum?’ ‘Of course I do, darling. If you use your thumbs a lot, you will have children with big thumbs. If they use their thumbs a lot, and so do their children, then eventually there will be a new sort of person with big thumbs.’

The whole point of natural selection is that it denies that acquired characteristics can be inherited. According to modern Darwinism, new species are created by a purposeless, random process of genetic mutation. If keen Darwinians such as my mother can get it wrong, it is perhaps not surprising that the theory is under attack.

The current confusion is the result of a decade of campaigning by a group of Christian academics who work for a think-tank called the Discovery Institute in Seattle. Their guiding principle — which they call Intelligent Design theory or ID — is a sophisticated version of St Thomas Aquinas’ Argument from Design.

Over the last few years they have had a staggering impact. Just a few weeks ago, they persuaded an American publisher of biology textbooks to add a paragraph encouraging students to analyse theories other than Darwinism. Over the past two years they have convinced the boards of education in Ohio, Michigan, West Virginia and Georgia to teach children about Intelligent Design. Indiana and Texas are keen to follow suit. They sponsor debates, set up research fellowships, publish books, distribute flyers and badges, and conduct polls, the latest of which shows that 71 per cent of adult Americans think that the evidence against Darwin should be taught in schools.

Unlike the swivel-eyed creationists, ID supporters are very keen on scientific evidence. They accept that the earth was not created in six days, and is billions of years old. They also concede Darwin’s theory of microevolution: that species may, over time, adapt to suit their environments. What Intelligent Design advocates deny is macroevolution: the idea that all life emerged from some common ancestor slowly wriggling around in primordial soup. If you study the biological world with an open mind, they say, you will see more evidence that each separate species was created by an Intelligent Designer. The most prominent members of the ID movement are Michael Behe, the biochemist, and Phillip E. Johnson, professor of law at the University of California. They share a belief that it is impossible for small, incremental changes to have created the amazing diversity of life. There is no way that every organism could have been created by blind chance, they say. The ‘fine-tuning’ of the universe indicates a creator.

Behe attacks Darwinism in his 1996 book, Darwin’s Black Box: the Biochemical Challenge to Evolution. If you look inside cells, Behe says, you see that they are like wonderfully intricate little machines. Each part is so precisely engineered that if you were to remove or alter a single part, the whole thing would grind to a halt. The cell has irreducible complexity; we cannot conceive of it functioning in a less developed state. How then, asks Behe, could a cell have developed through a series of random adaptations?

Then there is the arsenal of arguments about the fossil record, of which the most forceful is that evolutionists have not found the fossils of any transitional species — half reptile and half bird, for instance. Similarly, there are no rich fossil deposits before the Cambrian era about 550 million years ago. If Darwin was right, what happened to the fossils of all the Cambrian animals’ evolutionary predecessors?

Phillip E. Johnson, author of Darwin on Trial, hopes that these arguments will serve as a ‘wedge’, opening up science teaching to discussions about God. Evolution is unscientific, he says, because it is not testable or falsifiable; it makes claims about events (such as the very beginning of life on earth) that can never be recreated. ‘In good time new theories will emerge and science will change,’ he writes. ‘Maybe there will be a new theory of evolution, but it is also possible that the basic concept will collapse and science will acknowledge that those elusive common ancestors of the major biological groups never existed.’

If Johnson is right, then God, or a designer, deposited each new species on the planet, fully formed and marked ‘made in heaven’. This is not a very modern-sounding idea, but one whose supporters write articles in respectable magazines and use phrases such as ‘Cambrian explosion’ and ‘irreducible complexity’. Few of us then (including, I suspect, the boards that approve American biology textbooks) would be confident enough to question it. Especially intimidating for scientific ignoramuses is the Discovery Institute’s list of 100 scientists, including Nobel prize nominees, who doubt that random mutation and natural selection can account for the complexity of life.

Professor Richard Dawkins sent me his rather different opinion of the ID movement: ‘Imagine,’ he wrote, ‘that there is a well-organised and well-financed group of nutters, implacably convinced that the Roman Empire never existed. Hadrian’s Wall, Verulamium, Pompeii — Rome itself — are all planted fakes. The Latin language, for all its rich literature and its Romance language grandchildren, is a Victorian fabrication. The Rome deniers are, no doubt, harmless wingnuts, more harmless than the Holocaust deniers whom they resemble. Smile and be tolerant, just as we smile at the Flat Earth Society. But your tolerance might wear thin if you happen to be a lifelong scholar and teacher of Roman history, language or literature. You suddenly find yourself obliged to interrupt your magnum opus on the Odes of Horace in order to devote time and effort to rebutting a well-financed propaganda campaign claiming that the entire classical world that you love never existed.’

So are all Intelligent Design supporters fantasists and idiots, just wasting the time of proper scientists and deluding the general public? If Dawkins is to be believed, the neo-Darwinists have come up with satisfactory answers to all the conundrums posed by ID proponents.

In response to Michael Behe, the Darwinists point out that although a biochemical system may look essential and irreducible, many of its component parts can serve multiple functions. For instance, the blood-clotting mechanism that Behe cites as an example of an irreducibly complex system seems, on close inspection, to involve the modification of proteins that were originally used in digestion.

Matt Ridley, the science writer, kindly explained the lack of fossils before the Cambrian explosion: ‘Easy. There were no hard body parts before then. Why? Probably because there were few mobile predators, and so few jaws and few eyes. There are in fact lots of Precambrian fossils, but they are mostly microbial fossils, which are microscopic and boring.’

Likewise, palaeontologists say that they do know of some examples of fossils intermediate in form between the various taxonomic groups. The half-dinosaur, half-bird archaeopteryx, for instance, combines feathers and skeletal structures peculiar to birds with features of dinosaurs.

‘Huh,’ say the Intelligent Designers, who do not accept poor old archaeopteryx as a transitional species at all. For them, he is just an extinct sort of bird that happened to look a bit like a reptile.

It would be fair to say that the ID lobby has done us a favour in drawing attention to some serious problems, and perhaps breaking the stranglehold of atheistic neo-Darwinism; but their credibility is damaged by the fact that scientists are finding new evidence every day to support the theory of macroevolution. There is also something a little unnerving about the way in which the ID movement is funded. Most of the Discovery Institute’s $4 million annual budget comes from evangelical Christian organisations. One important donor is the Ahmanson family, who have a long-standing affiliation to Christian Reconstructionism, an extreme faction of the religious Right that wants to replace American democracy with a fundamentalist theocracy.

There is a more metaphysical problem for Intelligent Design. If we accept a lack of scientific evidence as proof of a creator’s existence, then surely we must regard every subsequent relevant scientific discovery, each new Precambrian fossil, as an argument against the existence of God.

The debate has anyway been confused by the vitriol each side pours on the other. Phillip Johnson calls Dawkins a ‘blusterer’ who has been ‘highly honoured by scientific establishments for promoting materialism in the name of science’. Dawkins retorts that religion ‘is a kind of organised misconception. It is millions of people being systematically educated in error, told falsehoods by people who command respect.’

Perhaps the answer is that the whole battle could have been avoided if Darwinism had not been put forward as proof of the non-existence of God. As Kenneth Miller, a Darwinian scientist and a Christian, says in his book Finding Darwin’s God, ‘Evolution may explain the existence of our most basic biological drives and desires but that does not tell us that it is always proper to act on them.... Those who ask from science a final argument, an ultimate proof, an unassailable position from which the issue of God may be decided will always be disappointed. As a scientist I claim no new proofs, no revolutionary data, no stunning insight into nature that can tip the balance in one direction or another. But I do claim that to a believer, even in the most traditional sense, evolutionary biology is not at all the obstacle we often believe it to be. In many respects evolution is the key to understanding our relationship with God.’

St Basil, the 4th-century Archbishop of Caesarea in Cappadocia, said much the same thing: ‘Why do the waters give birth also to birds?’ he asked, writing about Genesis. ‘Because there is, so to say, a family link between the creatures that fly and those that swim. In the same way that fish cut the waters, using their fins to carry them forward, so we see the birds float in the air by the help of their wings.’ If an Archbishop living 1,400 years before Darwin can reconcile God with evolution, then perhaps Dawkins and the ID lobby should be persuaded to do so as well.

Copyright © 2003 The Spectator

Tuesday, October 28, 2003

But, What About W?

Bummer. This guy predicted Clinton's impeachment and W's tax cuts. What about the election of 2004? How about Iraq? Can anyone spell T-E-T? I heard an All Things Considered broadcast yesterday on NPR. The interviewee (a retired general) was calling for a re-invasion of the Sunni Triangle. Do we get do-overs? W proclaimed that the multiple car bombings yesterday were the work of extremists! Duh! If this be (fair & balanced) wackiness, so be it.

[x Chronicle of Higher Education]

An Overlooked Theory on Presidential Politics

by Rick Valelly

A little-discussed theory of the American presidency has had startling, if unnoticed, success as a crystal ball. Stephen Skowronek, a chaired professor at Yale, is the author of The Politics Presidents Make: Leadership From John Adams to Bill Clinton (Harvard University Press, 1997), which is a revision of his 1993 book with the same main title but a slightly different subtitle, Leadership From John Adams to George Bush. The books have foretold such major events as Bill Clinton's impeachment and George W. Bush's tax cuts, as well as his drive for a second gulf war.

At recent conferences on the presidency in Princeton, N.J., and London, there was little mention of the theory or Skowronek, nor were there round tables or panels about his work at the annual meeting of the American Political Science Association in Philadelphia over Labor Day weekend. A survey of 930 or so hits on the Internet for Skowronek's name indicates that no one seems to be commenting on his predictive achievements. Instead, a previous book on the origins and evolution of federal bureaucracies is widely cited.

The quiet over Skowronek's presidency books is ironic -- and also rather troubling. It is ironic because political scientists say that we want our discipline to become a predictive science of political behavior, yet we seem to have overlooked one of those rare instances of apparent success. It is troubling because it suggests that stereotypes about what constitutes predictive science may be clouding our vision. Skowronek's theory is historical and qualitative -- it doesn't have the right "look" for such science.

In aspiring to predictive power, political science has become sharply formal and analytic, innovatively adapting aspects of microeconomics, psychology, and the mathematics of social choice and game theory. Despite the strong social and professional cues to be on the lookout for working predictive theories, the continuing accomplishment of Skowronek's approach to understanding the presidency has gone unremarked.

To be sure, Skowronek never said in print that Bill Clinton would be impeached. But reading his book before the event, a clever reader (though, alas, I was not that reader) would have seen that the book's typology of presidents clearly implied such a crisis -- the second instance of impeachment in American history. Also, even as George W. Bush took the oath of office from the chief justice, Skowronek's theory foretold several salient features of the current administration. The theory suggested we should look for Bush to be treated more deferentially than his predecessor by both the news media and the political opposition. Expect Bush to replicate the massive, signature tax cuts of Ronald Reagan, his philosophical mentor. Expect, too, that Bush will press for a war somewhere. Finally, be prepared for the possibility that in agitating for war Bush will run the risk of misleading the public and Congress about the rationale.

The predictive strengths of Skowronek's work are rooted in a brilliantly simple scheme for classifying presidents and their impact on American politics. The framework assumes two things.

First, presidents are elected into the most powerful office in America against the backdrop of what Skowronek calls a "regime." In other words, they don't start history anew the day they walk into the Oval Office. A lot of their job has been defined beforehand.

Regimes comprise a particular public philosophy, like the New Deal's emphasis on government intervention on behalf of citizens. Another element of a regime is the mix of policies that further its public philosophy. And a regime contains a "carrier party," a party energized and renewed by introducing the new program. After the initial excitement of the regime's first presidential leader (for instance, Franklin Roosevelt or Ronald Reagan), the party becomes the vehicle for ideas with which everyone has had some experience -- say, the Medicare amendment of the Social Security Act, or the tax cuts under the current administration. Late in the life of a regime, the once-dominant but actually quite brittle governing party stands for worn-out ideas.

Skowronek suggests that there have been five such regimes: The first was inaugurated by Thomas Jefferson, the next by Andrew Jackson, the third by Abraham Lincoln, the fourth by Franklin Roosevelt, and the most recent by Ronald Reagan.

Skowronek's second simple assumption has to do with a president's "regime affiliation." Affiliation with or repudiation of the regime comes from the incoming president's party identity. New presidents from a party that has been out of power can and will repudiate the rival party's regime if it appears bankrupt. Presidents inaugurated under those circumstances call the country to a new covenant or a new deal. Recall Ronald Reagan's famous subversion of a '60s catchphrase when he declared, in his first inaugural address: "In this present crisis, government is not the solution but the problem. ... It is no coincidence that our present troubles parallel and are proportionate to the intervention and intrusion in our lives that result from unnecessary and excessive growth of government. ... So, with all the creative energy at our command, let us begin an era of national renewal." Even if he is not particularly successful in realizing the new public philosophy to which he has pinned his colors, this kind of a repudiate-and-renew president is widely hailed as a great innovator.

Alternatively, an incoming president might affiliate with an existing, strong regime but seek to improve it. Think here of Lyndon Johnson, whose career began during the later phases of the New Deal. As president he pushed for Medicare, the Great Society, and the War on Poverty. He expressed his filial piety by signing Medicare, an amendment to the Social Security Act of 1935, in the presence of Harry Truman. Think, too, of George W. Bush, who has won Reaganesque tax cuts that -- together with increases in military spending -- are likely to force a great reduction in government social-policy obligations, thus furthering Reagan's dream of ending individual dependence on government. In essence, then, a president strongly affiliated with a regime is a defender of the faith.

Paradoxically, though, such presidents can break their party in two, or at least preside over serious factional disagreements. Skowronek sketches various ways that can happen. One scenario involves war. Deeply conscious of how important it is to hold his party in line, a president can find war useful -- and in a dangerous world, it isn't hard to find real enemies. But a president pushing for war risks the appearance of possessing ulterior motives, or seeming impatient. In early August 1964, for example, Lyndon Johnson stampeded the Senate into the Gulf of Tonkin Resolution and then took the resolution, after his landslide over Goldwater, as Congressional authorization for a military buildup in Vietnam. Any subsequent military or diplomatic defeat following such an apparent manipulation of Congress will spark party factionalism, as the president's party colleagues scramble to keep control. It isn't hard, of course, to see how all this might apply to the current Bush administration. Criticism of Bush's plans for, and commitment to, reconstruction in Iraq influenced his call for a doubling in spending there.

To take a third general type, a new president may have no choice but to affiliate with a weak regime and try to muddle through. He characteristically does that by stepping away from the regime's stale public philosophy, emphasizing instead his administrative competence. Such was the approach of Herbert Hoover and Jimmy Carter. That sort of president is a caretaker of a threadbare public philosophy, doing his best with a bad historical hand, often with great ingenuity, as revisionist scholarship on Hoover has shown -- for instance, the extent to which he had coherent programs for addressing unemployment and collapsing farm prices that foreshadowed key elements of the New Deal.

Yet another kind of president is someone who, like the innovator, repudiates the regime -- but does so when it is still going strong in people's hearts and minds. That kind of oppositional president seeks some "third way," even though other members of his party are convinced that there is little wrong with the reigning political philosophy. He quickly comes to seem disingenuous, even dangerous, to many of his contemporaries.

Andrew Johnson was unilaterally reconstructing the ex-Confederacy in ways that subverted Republican plans made by Congress during the Civil War. He was eventually impeached when he dismissed Secretary of War Edwin Stanton, the cabinet ally of Johnson's Congressional Republican opponents. Stanton's dismissal flouted the Tenure of Office Act, which gave the Senate a say over the dismissal of officials whose appointment it had approved. That provided the legal fodder for the impeachment. Richard Nixon advocated many liberal policies, proposing, for instance, a guaranteed national income, an idea that now seems hopelessly quaint. But he also disturbed and startled many of his opponents by openly regarding them as dangerous enemies warranting surveillance and dirty tricks, and that set the stage for his resignation. Clinton hired the dark prince of triangulation, Dick Morris. Clinton too was impeached and then tried after a desperately zealous independent prosecutor forced him into a public lie, under oath, about his affair with Monica Lewinsky.

Third-way presidents get the worst of both worlds -- seeming untrustworthy both to the partisan opposition and to parts of their own base because they pursue policies that threaten to remove existing party polarities. It is striking that eventually they blunder into some act heinous enough to be plausibly treated as a crime.

In short, there is a simple two-by-two classification undergirding Skowronek's historical account. One dimension of classification is "strength of regime," which ranges from strong and commanding to collapsing and discredited. The other dimension is strength of the president's affiliation with the existing regime. That affiliation can range from none (repudiation, as with Reagan, or opposition, as with Clinton) through weak (Hoover or Carter) to strong (Lyndon Johnson or George W. Bush).

To be sure, a tale of recurring patterns that can be surmised from a two-by-two classification seems very distant from the presidency as it is experienced by the president, and by citizens, day by day. Conscious that a president's personal strengths matter greatly, specialists on the presidency, aided by recent theories of emotional intelligence, sort presidents according to their interpersonal skills. They also analyze the application of formal and informal powers: how presidents fare with Congress under different conditions of party control and by type of policy request, whether they move public opinion when they would like to, and how well they resist the inevitable undertow of public disillusionment. Scholars ask, as well, how the institutional evolution of the executive branch magnifies or frustrates such applications of temperament and skill. Finally, formal legal analysts assess any changes in the balance of executive and legislative power. In other words, in the subfield of presidency studies, most analysts look over the president's shoulder. They hew to the actual experience of being an overworked politician who makes literally thousands of anticipated and unanticipated decisions in the face of enormous ambiguity and uncertainty.

In the end, though, there is no gainsaying the performance of Skowronek's theory. Yes, there are lots of alternative explanations for the major events it has predicted. But that is precisely where the counterintuitive parsimony of Skowronek's theory comes in. By parsimony, political scientists mean successfully explaining the widest possible range of outcomes with the fewest possible variables. In 1993, there was no other coherent theory of presidential politics that anticipated the full range of events that Skowronek's theory did: impeachment of a Clinton-like president; and, if the Republicans recaptured the White House, tax cuts, war, and related outcomes like the news media's doting (at least for a while) on a chief executive who would use the rhetoric of common cause in a time of crisis.

Presidents, Skowronek's theory insists, are highly constrained actors, seemingly fated to replay variations on one of the four roles he outlines. That this seems fatalistic or cyclical -- unlike the complicated lived experience of the presidency -- doesn't lessen the theory's power. If the model works, then the right question to ask is not whether it is realistic, but why it works.

Of course, the prerequisite for such a discussion is adequate recognition that the theory is working -- which is what seems to have eluded Skowronek. Here a subtle scientistic prejudice against his chosen scholarly identity may be in play. If so, the silence about his work raises an important question about the contemporary organization of American political science.

A bit of intellectual history is in order. The sort of work for which Skowronek is known is often called "American political development," or APD. (A disclosure: My own professional identity is closest to APD.) APD scholars roam interpretively across huge swaths of American political history. Their work so far has been qualitative, and thus tends to eschew any number-crunching beyond descriptive statistics.

For many years, APD's critics regularly knocked it as "traditional," and thus not "modern," political science. They often called it "bigthink" -- speculative, broad rumination without any scientific value.

Those days are now happily over. APD is much less controversial than it was when Skowronek and Karen Orren, of the University of California at Los Angeles, founded the subfield's flagship journal, Studies in American Political Development, in 1985. Since then, other journals with the same focus have appeared -- for example, the Journal of Policy History -- and APD work has been published in mainstream refereed journals, too. The outgoing president of the American Political Science Association, Theda Skocpol of Harvard, a widely known social-policy scholar, has played an important role in mainstreaming APD work.

But does that acceptance disguise a lingering refusal to acknowledge that APD scholarship can say as much about the present and future of American politics as other kinds of research? I fear that many political scientists draw a line in their minds between "real," possibly predictive political science -- work that requires lots of data, big computer runs, formal modeling, and regular National Science Foundation funds -- and work that they consider pleasantly stimulating but not scientific enough to serve as a reliable guide to, or predictor of, events. It would be a sad paradox if, while honoring the appearance of science, we let that prejudice block powerful and elegant predictive theories like Skowronek's from coming to the fore.

Rick Valelly is a professor of political science at Swarthmore College.

Copyright © 2003 by The Chronicle of Higher Education

Sapper Answers A Student Query About Sapper Classroom Demeanor Of Late

In message 180 on Sunday, October 26, 2003 9:43pm, A Student writes:

Dear Professor Sapper,

Ive been getting an old feeling that you may be pissed off at our class or maybe just a couple of individuals. I was just wondering because you don't come to class with the excitement you did at the beggining of the semester. Are you mad at us or just mad at the world. If you don't mind explaining please email ME back. I can't wait for the test.

Thanks.

Sincerely,

A Student

Dear Student,

First, the urological reference belongs on the streets, not in communication between a student and a college teacher. I am old school and I don't use such scatological language in the classroom. No references to urine. No references to bull excrement. I may utter a mild blasphemy (damn or hell) from time to time, but no scatology.

In the WebCT Glossary for this course, there is an entry for Grade 13¹. Likewise, there is an entry for Keep The Customer Satisfied².

Finally, did you read the latest posting to the WebCT Bulletin Board about the AC HIST student who pursued information from a professor of history at Yale University? That was as positive as I can be. I cannot be positive about Grade 13 behavior or the assumption that students are customers. My classroom is not McDonald's. I don't wear a paper hat and carry a spatula. There is no drive-up window in my classroom. Students are not customers.

The best statement on customers was written by the late Nobel Laureate in Literature, William Faulkner, when he was forced to resign (or be fired) as postmaster of the campus post office at the University of Mississippi. Faulkner wrote:

I reckon I'll be at the beck and call of folks with money all my life, but thank God I won't ever again have to be at the beck and call of every son of a bitch who's got two cents to buy a stamp.

Thanks for your concern (I think).

-Dr. Sapper

¹Grade 13 - Just as Peter Pan lived in Never-Never-Land, Dorothy and Toto lived (for a time) in the Land of Oz, and Clark Kent lived in Smallville, there are some Amarillo College students who think they are in Grade 13. This imaginary place is an extension of their experience in Grades K-12. Amarillo COLLEGE is not an extension of Happy High School or Ridgemont High School, or Sleepy Hollow Elementary School. Students who attend Amarillo College are ADULTS. As such, they are addressed formally in Dr. Sapper's HIST classes. No nicknames, no diminutives, no jocular familiarity. Students who want a Grade 13 experience should enroll in another HIST class somewhere else, perhaps Amarillo College. There will be ZERO TOLERANCE for disruptive behavior: idle chit-chat, whistling, and walking out of class before the class is dismissed are but a few examples of Grade 13 behavior that will not be tolerated in Dr. Sapper's HIST classes. A full list of disruptive, Grade 13 behaviors can be found in the WebCT Course Menu (Pet Peeves). FINAL WARNING: ZERO TOLERANCE MEANS EXACTLY THAT! (Spring 2003)

²Keep The Customer Satisfied - In 1970, Paul Simon wrote:

It's the same old story / Everywhere I go, I get slandered, / Libeled, I hear words I never heard / In the Bible. / And I'm so tired, I'm oh so tired, / But I'm trying to keep my customers satisfied, / Satisfied.

And Dr. Sapper was never the same, just getting more tired by the semester. (Spring 2001)

Monday, October 27, 2003

I KNOW I FEEL SAFER ABOUT AIRPORT SECURITY

On a Sunday this past July, I flew back to Amarillo from Milwaukee, WI, following the celebration of my son's wedding. I had flown into General Mitchell International Airport on the previous Friday without a single bleep. That was from Amarillo via Dallas and on to Milwaukee (eventually), past all of the TSA screeners. On that fateful Sunday, the screener in Milwaukee confiscated a corkscrew and a tiny penknife after going through ALL of my carry-on stuff. Now, a student at Guilford College in NC, Nathaniel T. Heatwole, is getting the book thrown at him by the Ashcroft Justice Department for carrying box cutters, the harmless equivalent of plastic explosive material (modeling clay), and bottles of bleach aboard Southwest Airlines flights out of Raleigh-Durham and Baltimore. What is wrong with this picture? Heatwole sent warnings IN ADVANCE to the FBI. Duh! Ashcroft is even dumber than W. At least W didn't lose an election to a dead man. In the meantime, here is Ben Sargent's take on airport security in October 2003. I have a question for the Democrat nominee (whoever he/she is) in 2004. At the first debate, ask W: Should we feel safer than we did 4 years ago? See how the genius handles that one! It gets worse and worse in Iraq. It gets worse and worse in this country. Where is bin Laden? Where is Hussein? Nathaniel Heatwole deserves a commendation for exposing the sorry state of airport security in 2003. If this be (fair & balanced) sedition, make the most of it!

Sunday, October 26, 2003

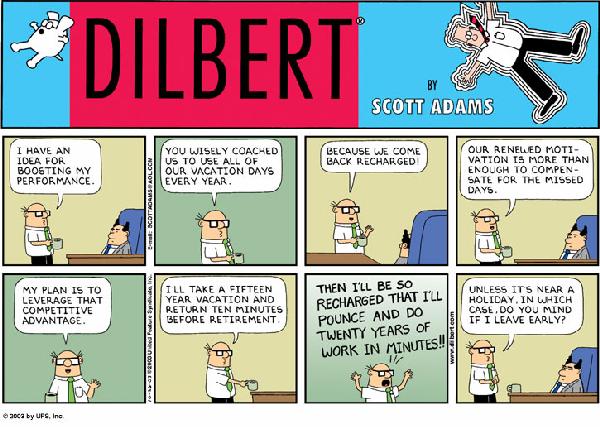

Wally Is Right On About Retirement!

Wally, Dilbert's co-worker, spends most of his time walking around with a coffee mug. The pointy-haired boss reminds me of a lot of the administrators at the Collegium. One bright idea after another. The idea of a 15-year vacation and returning 10 minutes prior to retirement REALLY appeals to me! Wally's idea of returning so recharged that he will do 20 years of work in 10 minutes is nothing short of genius. If this be (fair & balanced) malingering, so be it!

Copyright © 2003 Scott Adams

Let's Hear It For Solo Consumers!

I always KNEW that I would be a cutting-edge guy. Now, I am on the cutting-edge. This could be the greatest population shift since the baby boom. Or, not. More likely, I am on the blunt edge of change and can't tell the difference. If this be (fair & balanced) demography, so be it.

[x Christian Science Monitor]

The power of 1

About one-fourth of Americans now live alone. As their numbers grow, these singles are becoming a significant cultural and economic force.

By Marilyn Gardner | Staff writer of The Christian Science Monitor

As Laura Peet put the finishing touches on plans for a vacation in Italy this month, her anticipation ran high. For years she had dreamed of visiting Tuscany, Rome, and the Cinque Terre. Now the trip was at hand, with just one thing missing: someone to share it with her.

"I was holding out on Italy as a honeymoon spot," says Ms. Peet, a marketing consultant in New York. "That hasn't happened yet, so I'm going for my birthday."

Score one for independence and pragmatism, the hallmarks of 21st-century singlehood. In numbers and attitudes, people like Peet are creating a demographic revolution that is slowly and quietly reshaping the social, cultural, and economic landscape.

In 1940, less than 8 percent of Americans lived alone. Today that proportion has more than tripled, reaching nearly 26 percent. Singles number 86 million, according to the Census Bureau, and virtually half of all households are now headed by unmarried adults.

Signs of this demographic revolution, this kingdom of singledom, appear everywhere, including Capitol Hill.

Last month the Census Bureau reported that 132 members of the House of Representatives have districts in which the majority of households are headed by unmarried adults.

In Hollywood, television programs feature singles game shows, reality shows, sitcoms, and hits such as "Sex and the City."

Read all about it

Off-screen, whole forests are being felled to print a burgeoning genre of books geared to singles, primarily women. Nonfiction self-help books, written in breezy, upbeat tones, serve as cheerleaders for singlehood and advice-givers on how to find a marriage partner.

A category of fiction dubbed Chick Lit spans everything from Bridget Jones to titles such as "Pushing 30." Harlequin Books publishes a special imprint called Red Dress Ink, billed as "stories that reflect the lifestyles of today's urban, single woman."

In June, a panel at Printer's Row Book Fair in Chicago discussed "The Fiction of Singledom." The well-attended event attracted a predominantly female audience, says panelist Steve Almond of Somerville, Mass., whose writings include short stories about singles.

Events like this, together with books for singles, dating services and websites, personals ads, and five-minute dating sessions, add up to big business, so sprawling that it cannot be quantified. Mr. Almond calls it the "commercialization of romantic connections."

To help the unattached make connections, even museums are getting into the act. As one example, Boston's Museum of Fine Arts holds a monthly gathering called First Friday, appealing to singles who want more cultured, upscale places to mingle than clubs and bars.

Singles with discretionary time to work out at the gym feed a thriving fitness culture. Travel agencies and special tour groups are also capitalizing on this market. Many travelers, like Peet, go alone. Within the United States, singles take 27 percent of all trips, according to the Travel Industry Association of America.

In supermarkets, the giant economy size still exists, but sharing shelf space with it is a newer invention: single-serving sizes. Mike Deagle of the Grocery Manufacturers of America calls it a "significant trend," although no statistics yet track those changes.

Even restaurants are finding ways to woo the growing number of singles who eat out. Marya Charles Alexander of Carlsbad, Calif., publishes an online newsletter, SoloDining.com, with a dual purpose. It urges restaurants to make solo diners feel welcome (no fair banishing them to Siberia, next to the swinging kitchen door). And it encourages customers eating alone to find pleasure in the experience.

"I do think 9/11 has definitely affected the way singles feel about their quality of life," Ms. Alexander says. "They're going to enjoy right now. More and more of them are saying, 'I am living my life today. I'm not going to be staying home, not going to be shackled by whatever people think about me eating out by myself.' "

A scattering of restaurants offer communal tables, enabling those arriving alone to share conversation. As Alexander notes, "Times are not as rosy as they were in the past for restaurateurs. It makes good business sense to cater to these people who are hungry and looking for an invitation to eat out."

For those setting a table for one at home, other help exists. Retirement communities are holding classes with cheerful titles such as "Cooking for One Can Be Fun," and adult education programs offer Cooking 101, geared to those living alone.

Singles are also nesting in record numbers. Traditionally, one-third of home buyers are single, with women buying houses at double the rate of men, according to the National Association of Realtors. Peet recently bought a house in rural Connecticut, becoming one of the 6 percent of single women who own second homes. In 2001, 10 percent of second homes were bought by single women and 10 percent by single men. All these new households in turn are helping to feather the nests of businesses that sell home furnishings, kitchenware, and lawn equipment.

Unmarried Americans are also changing the face of organized religion. Because younger singles often do not attend regularly, some churches and temples are creating special services to attract them. At Temple Kehillath Israel in Brookline, Mass., a monthly Shabbat service and dinner on Friday evening targets the generation between 22 and 32. And St. Paul's Cathedral (Episcopal) in Boston holds a Sunday evening gathering for those in their 20s and 30s.